THE DOGHOUSE

MAK Vienna

May 10 – September 10, 2023

THE DOGHOUSE & MOSAIK

SPAN 2023

SPAN (Matias del Campo, Sandra Manninger) 2023

INTRODUCTION

The Doghouse is an installation conceived for the exhibition /Imagine – A Journey into The New Virtual at the MAK in Vienna, Austria. The exhibition presents new positions that deal in different ways with architecture and urban planning in the context of novel and advanced technologies such as AR/VR and AI. Utopian, critical, futuristic and playful design strategies form the basis for new narratives, perspectives and possibilities for action in virtual space that can continue into physical reality [1].

The installation is based on the extensive research that SPAN (Matias del Campo, Sandra Manninger) has done in the area of artificial intelligence and architectural design. It is the first large-scale prototype model that demonstrates the possibility of folding 2D images created with diffusion models such as Midjourney, DALL-E 2 and Stable Diffusion into 3D objects. At the same time, the installation discusses the cultural implications of this novel technique: its meaning for the agency, authorship, sensibility and aesthetics of future architecture.

At the heart of the installation is the idea that meaning is constructed through the use of signifiers: symbols, codes, protocols and other forms of communication that we use to convey meaning. The use of 3D modeling, robotics, and image generation all involve the use of signifiers, each contributing to the creation of a larger narrative about the intersection of sensibility and technology. However, the use of artificial intelligence in the creation of this installation presents a particularly intriguing problem. As AI systems become increasingly sophisticated, they are capable of analyzing and interpreting data in ways that were previously impossible, leading to new forms of creative expression that challenge traditional notions of artistic authorship and intent [2, 3, 4].

SIGN, SIGNIFIER AND SIGNAL

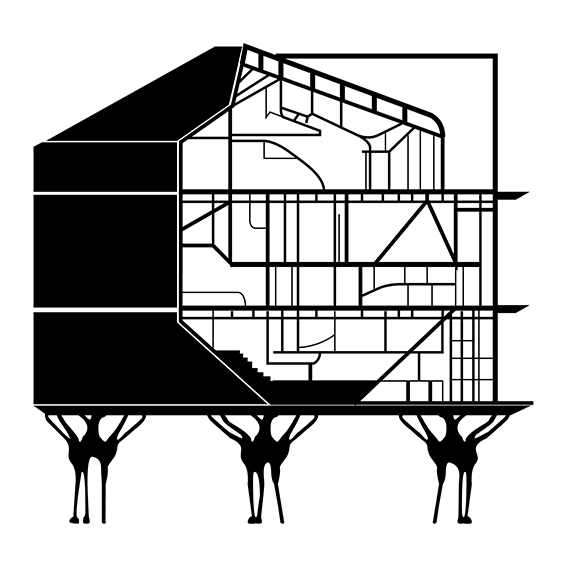

“The Doghouse” is an installation that speaks to the interplay of various signifiers, drawing attention to the complex web of meanings in the context of AI-generated content and human perception. The installation is composed of two distinct parts, a large-scale model of a house and a corresponding print, that work together to create a unified visual experience. The 3D model of the house is based on sections generated in the diffusion model Midjourney. Through the use of pixel projection, the 2D images generated by Midjourney are transformed into a three-dimensional mesh model that is both precise and intricate. The resulting structure turns into a signifier in and of itself, a visual representation of the mathematical processes that underpin its creation.

In addition, it addresses one of architecture’s mainstays: designing three-dimensional space through sections. In doing so, the installation serves as a commentary on the current condition of the Albertian Paradigm and its significance (or insignificance) for contemporary architecture that engages with machines capable of learning. Here Alberti’s idea of lineamenti [5] might be useful. Leon Battista Alberti introduced the concept of lineamenti in his treatise Della Pittura [6] (On Painting) in 1435. According to Alberti, lineamenti refers to the underlying geometric structure that supports the visible appearance of an object or form. When speaking about artificial intelligence, the idea of lineamenti can be applied to the use of algorithms and code to create the underlying structure of digital images and objects. Just as Alberti believed that the lineamenti provide a foundation for the visible appearance of an object, AI algorithms can provide the underlying structure and logic that supports the creation of digital images and objects.

The Doghouse. SPAN (Matias del Campo, Sandra Manninger) 2023. Iteratively working through the problem of the section. See more of these in our Instagram feed.

WHEN PIXELS PROJECT

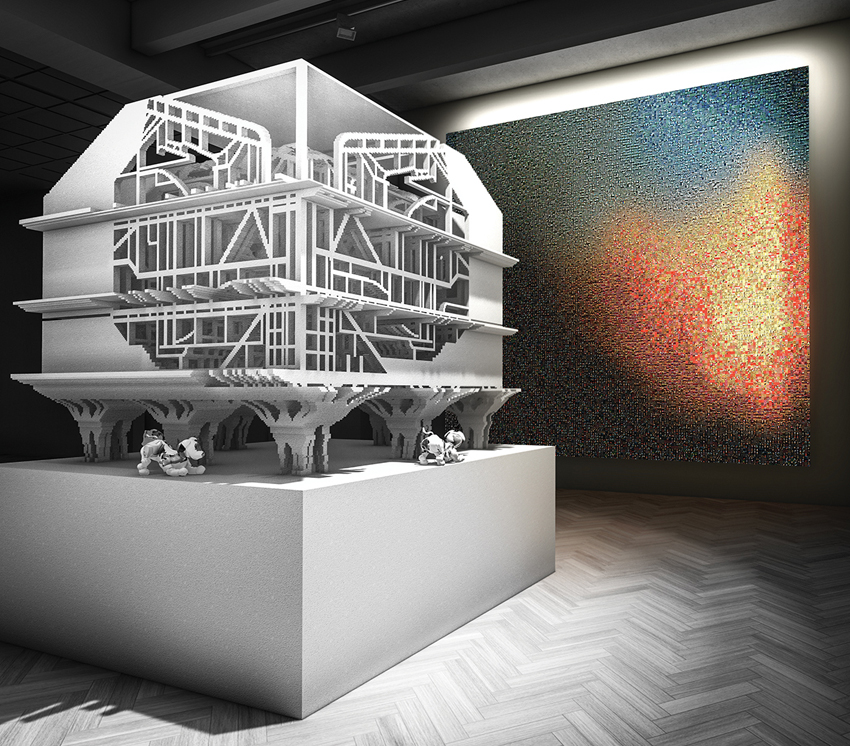

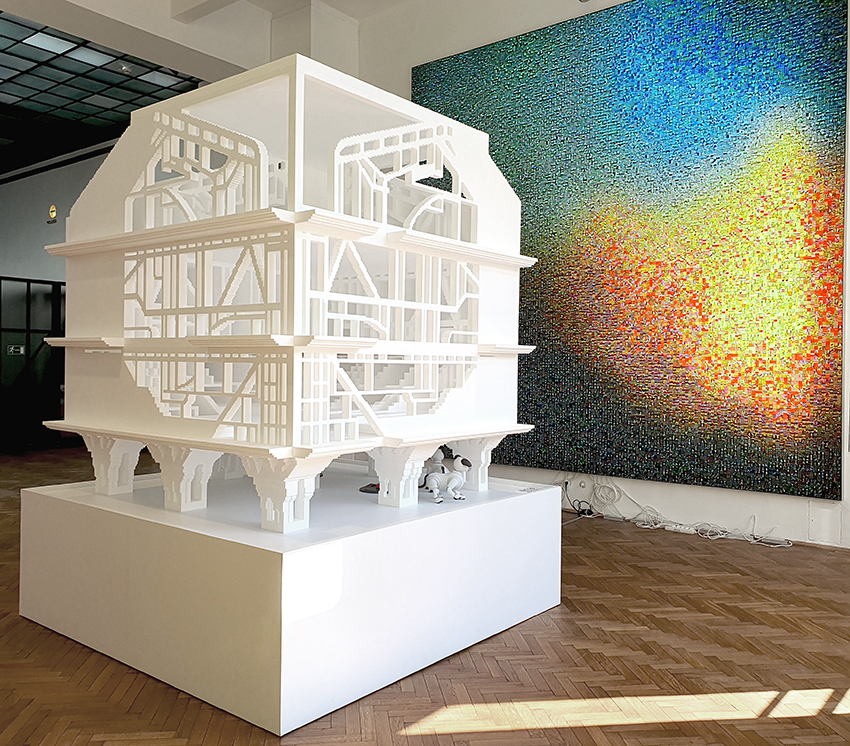

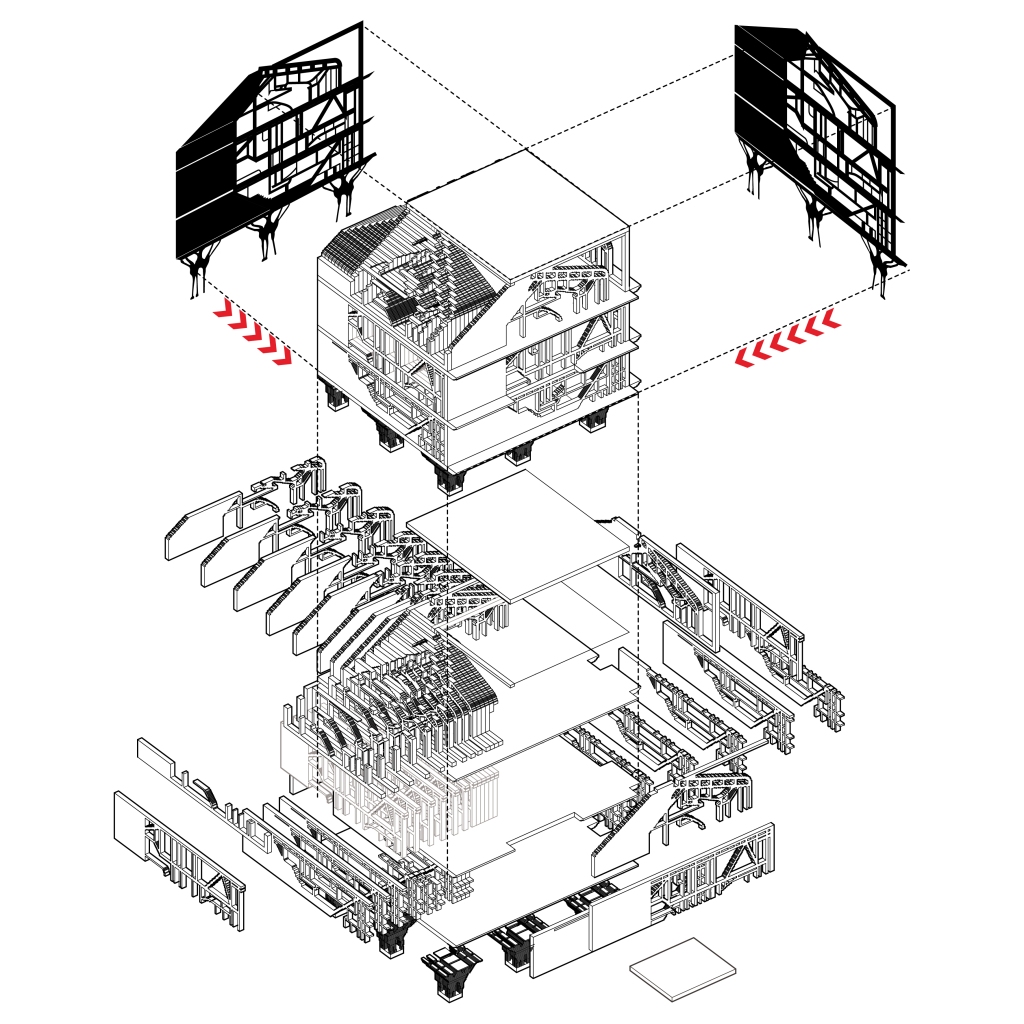

To create the 3D model of the house, pixel projection was used. Pixel projection is a technique for creating 3D models from 2D images, here the images generated by Midjourney. It involves projecting each pixel in an image onto a 3D voxel grid to determine the presence or absence of a voxel at that location. The first step is to acquire a set of elevations, sections or plans in 2D. Next, the images are processed to extract relevant features such as edges, corners, and textures, which are used to identify corresponding points across images. Once corresponding points have been identified, pixel projection assigns each pixel in an image to a voxel in the grid. This is achieved by computing the ray that passes through the camera focal point and the pixel coordinates in the image plane, and then intersecting the ray with the voxel grid. If the ray intersects a voxel, that voxel is marked as present in the 3D model; otherwise it is marked as absent. After all the sections and plans have been processed in this way, the voxel grid is populated with voxels that represent the 3D shape of the object. The resolution of the voxel grid determines the level of detail of the resulting 3D model, with higher resolutions producing more detailed models. The resulting model was cut into pieces that could be CNC milled, creating a precise and intricate structure.

The Sony AIBO [7] robots that inhabit the 3D model of the house are another layer of signification, adding a playful and interactive element to the installation. The robots move and interact with each other and their environment, creating a dynamic scene that is constantly shifting in meaning. The robots’ perception, their machine vision, is used to share their perspective with a large audience via a website that transmits what the robots are seeing. This extends the effect of the installation beyond the boundaries of the museum’s space.
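The pixel projection step described above can be illustrated with a minimal sketch. It is not the authors' pipeline: it assumes orthographic (parallel) rays instead of rays through a camera focal point, skips feature extraction and point matching, and takes three already aligned binary masks as input. The function name `carve_voxels` and the mask layout are hypothetical choices made for this illustration.

```python
import numpy as np

def carve_voxels(plan, front, side):
    """Intersect three orthographic silhouettes into a boolean voxel grid.

    plan  : (X, Y) mask seen from above (rays travel along z)
    front : (X, Z) mask seen from the front (rays travel along y)
    side  : (Y, Z) mask seen from the side (rays travel along x)
    """
    plan, front, side = (np.asarray(m, dtype=bool) for m in (plan, front, side))
    nx, ny = plan.shape
    nz = front.shape[1]
    if front.shape != (nx, nz) or side.shape != (ny, nz):
        raise ValueError("mask shapes must agree on their shared axes")
    # Broadcasting sweeps every pixel along its viewing ray through the grid.
    # A voxel is marked present only if all three views have a filled pixel
    # on the ray that passes through it; otherwise it is marked absent.
    return plan[:, :, None] & front[:, None, :] & side[None, :, :]

# Toy input: a 3 x 3 footprint whose middle strip is taller than its edges.
plan = np.ones((3, 3), dtype=bool)
front = np.array([[1, 0], [1, 1], [1, 0]], dtype=bool)
side = np.ones((3, 2), dtype=bool)
voxels = carve_voxels(plan, front, side)
```

The grid resolution is simply the pixel resolution of the masks, which mirrors the remark that a finer voxel grid yields a more detailed model. A perspective version would replace the broadcasting step with explicit ray and grid intersection tests.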

One of the AIBO robots populating The Doghouse. They can interact with the visitors of the installation. SPAN (Matias del Campo, Sandra Manninger) 2023

The large-scale print that accompanies the 3D model is equally significant, calling attention to the way in which architecture is composed of many discrete elements that come together to form a unified whole [8]. It discusses the part-to-whole nature of AI applications, from large-scale datasets of individual images (or any datapoints for that matter) to the restructuring of the data in the form of images, models or text. The print appears to be a color gradient from a distance, but upon closer inspection, it is revealed to be made up of thousands of images generated by different image generators such as StyleGAN2 [9], DALL-E 2 [10], Midjourney, and Stable Diffusion [11]. This complex mosaic of images becomes a signifier for the larger artwork, drawing attention to the intricate and multifaceted nature of the posthuman design ecology we are starting to experience. “The Doghouse” is an installation that speaks to the complex interplay of signifiers that surround contemporary architecture. Through its use of 3D modeling, robotics, and image generation, the installation becomes a visual representation of the various processes and technologies that underpin contemporary architecture production based on learning systems. As such, it invites the viewer to consider the ways in which architecture is composed of many discrete elements that come together to form a unified whole, and to engage with these elements in a playful and interactive way.

Installation “The Doghouse” & “Mosaik”. SPAN (Matias del Campo, Sandra Manninger) 2023

Roland Barthes believed that meaning was constructed through the interplay of signifiers, or the symbols and codes that we use to communicate [12]. “The Doghouse” is an installation that embodies this idea, using various signifiers to create a complex and multifaceted work. The 3D model of the house, for example, is a signifier for the underlying mathematical processes that went into its creation. Using pixel projection and CNC milling, the Midjourney-derived model is transformed into a physical structure that is both precise and intricate. The resulting design becomes a visual representation of the complex and often unseen processes that underpin modern technology and artistic expression. In this context, the sign is the concept or idea being communicated, the signifier is the physical form in which it is communicated, and the signal is the actual transmission or communication of the signifier. All three are present in the installation, with the communication of the object to the observer being the most elusive one, at least in physical space; in the virtual it might be easier to measure.

Isometric view of the pixel projection process and the subdivision into layers for the fabrication process. SPAN (Matias del Campo, Sandra Manninger) 2023

The Sony AIBO robots that inhabit the 3D model of the house are another layer of signification. The robots are themselves signifiers for the intersection of technology and humanity, adding a dynamic and interactive element to the artwork. As the robots move and interact with each other and their environment, they become a signifier for the ways in which technology can transform our understanding of the world around us. The print on the wall that accompanies the 3D model is yet another layer of signification. From a distance, the print appears to be a color gradient, but upon closer inspection, it is revealed to be a mosaic of thousands of images created by various image generators. The use of these generators becomes a signifier for the ever-changing nature of contemporary architecture and technology. The gradient of the print is itself based on a diffusion image, the prompt being “Diffusion Gradient”. The relationship between the global appearance as a color gradient and the 20,264 generated images the mosaic is made of can itself be read as a feedback loop between the information present in the generated images and the data present in the billions of images used to train the diffusion model responsible for the images. To this end, it calls attention to the way in which architecture is often composed of many discrete elements that come together to form a unified whole.
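How thousands of separate images can read as one gradient from a distance can be sketched in a few lines. The source does not describe how the Mosaik tiles were arranged, so everything here is an assumption for illustration: each image is reduced to its mean RGB color, tiles are ranked by where that color falls between two hypothetical end colors, and the grid is filled column by column so that every tile is used exactly once. The name `layout_by_gradient` is invented for this sketch.

```python
import numpy as np

def layout_by_gradient(tile_means, rows, cols, start_rgb, end_rgb):
    """Return a (rows, cols) grid of tile indices ordered along a color axis.

    tile_means : (rows * cols, 3) array of mean RGB colors, one per image
    start_rgb  : color the left edge of the mosaic should approach
    end_rgb    : color the right edge should approach (must differ from start)
    """
    tile_means = np.asarray(tile_means, dtype=float)
    if tile_means.shape != (rows * cols, 3):
        raise ValueError("need exactly rows * cols tiles with RGB means")
    start = np.asarray(start_rgb, dtype=float)
    axis = np.asarray(end_rgb, dtype=float) - start
    # Scalar position of each tile along the start-to-end color axis.
    position = (tile_means - start) @ axis / (axis @ axis)
    order = np.argsort(position, kind="stable")
    # Fill column by column so the position increases from left to right.
    return order.reshape(cols, rows).T

# Six grey tiles given by their mean colors, laid out on a 2 x 3 grid.
greys = [200, 0, 100, 50, 250, 150]
tile_means = [[g, g, g] for g in greys]
grid = layout_by_gradient(tile_means, 2, 3, (0, 0, 0), (255, 255, 255))
```

Each entry of `grid` is the index of the image to place in that cell. Seen up close the individual images remain distinct, while the ordering of their mean colors produces the overall gradient.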

THE CONSTRUCTION OF MEANING

This installation is a piece that speaks to the complex interplay of signifiers that make up contemporary architectural expression. The installation invites the viewer to engage with the artwork in a playful and interactive way, while also encouraging them to consider the complex processes and concepts that went into its creation. Through its use of 3D modeling, robotics, and image generation, the installation becomes a visual representation of the many different codes and symbols that we use to communicate meaning. By inviting the viewer to engage with these elements in a playful and interactive way, the work encourages us to question our assumptions about the nature of architecture and the ways in which it is created and understood. However, the use of artificial intelligence in the creation of the installation raises critical questions about the ways in which meaning is constructed and communicated. As AI systems become increasingly sophisticated, they are able to analyze and interpret data in ways that were previously impossible, leading to new forms of creative expression that challenge traditional notions of artistic authorship and intent.

Mosaik – a Gradient Diffusion Image made of 20,264 AI-generated images – or: twentythreebillionsixhundredandtwentyninemillionninehundredtwentyninethousandandsixhundred pixels (Backlit Print) – SPAN (Matias del Campo, Sandra Manninger) 2023

Roland Barthes’s theory of semiotics proposes that meaning is constructed through the interplay of the sign and signifier. To these elements, we could add the concept of signal. The sign is the concept or idea being communicated, the signifier is the physical form in which it is communicated, and the signal is the actual transmission or communication of the signifier. In the context of artificial intelligence, the interplay between these elements becomes even more complex. AI systems use algorithms to analyze and interpret data, which they then use to make decisions or predictions. This process involves a complex web of signifiers, signals, and signs, each of which plays a role in shaping the output of the system. For example, when an AI system is trained to recognize images, it uses a complex set of algorithms to analyze the pixels in the image and identify patterns. These patterns become signifiers for the object or concept being represented in the image. The system then uses these signifiers to identify the object or concept being represented, which becomes the sign. Finally, the system outputs a signal in the form of a prediction or classification. However, the use of AI in the creation of architecture adds yet another layer of complexity to the interplay between sign, signifier, and signal. By using image generators such as StyleGAN2, DALL-E 2, Midjourney, and Stable Diffusion, architects are able to create unexpected forms of architectural expression, found in the latent space, that challenge traditional notions of meaning and interpretation.

These image generators use complex algorithms to produce images that are based on patterns and structures identified within a dataset. The patterns and structures become signifiers for the underlying concepts and ideas being represented in the image, which in turn become signs. The output of the generator is then a signal that represents the artist’s interpretation of the underlying data. In this way, the interplay between sign, signifier, and signal in the context of AI-generated architecture becomes even more complex, as the meaning of these novel spatial solutions is not only shaped by the underlying patterns and structures within the data, but also by the algorithms used to generate the design itself. Generally speaking, the interplay between sign, signifier, and signal in the context of both semiotics and artificial intelligence highlights the complex ways in which meaning is constructed and communicated. By understanding the various elements that contribute to the creation and interpretation of meaning, we can better appreciate the ways in which art and technology intersect to create new and unexpected forms of expression. “The Doghouse” installation provides a thought-provoking exploration of the complex interplay between human behavior, semiotics, and artificial intelligence. By highlighting the ways in which meaning is constructed and communicated through the use of signifiers, the installation raises critical questions about the role of technology in the creative process, and the importance of human interpretation and agency in the construction of meaning.

EXHIBITION CREDITS: /Imagine: A Journey into the New Virtual

PARTICIPANTS

Morehshin Allahyari, Alisa Andrasek, Matias del Campo & Sandra Manninger (SPAN), Alexis Christodoulou, Formundrausch, Genevieve Goffman, Kordae Jatafa Henry, Miriam Hillawi Abraham, iheartblob, Simone C. Niquille (/technoflesh Studio), Lee Pivnik (The Institute of Queer Ecology), Andrés Reisinger (Reisinger Studio), Jose Sanchez (Plethora Project), Space Popular, Studio Mary Lennox, Studio Precht, Charlotte Taylor, Leah Wulfman, Liam Young, Zyva Studio, 2MVD (Damjan Minovski, Valerie Messini)

CURATORS

Bika Rebek, Architect and Principal, Some Place Studio

Marlies Wirth, Curator Digital Culture and MAK Design Collection

EXHIBITION DESIGN

Some Place Studio

SPONSORS

This installation is supported by the Taubman College of Architecture and Urban Planning, ArtsEngine University of Michigan, and Idee & Design, the Artfactory – Stainz, Styria, Austria.

THE DOGHOUSE

(CNC milled foam and two AIBO Robots)

SPAN (Matias del Campo, Sandra Manninger) 2023

PRODUCTION ASSISTANCE:

Sang Wong Kang, Chandana Rao, Brendan Tsai, Aditya Saiswal, Devishi Suresh Kambiranda, Leon Mackow, Ranya Betts-Chen

SPONSORS:

Taubman College of Architecture & Urban Planning | University of Michigan, Center for Academic Innovation – XR, Media Design & Production | University of Michigan, IDEE & DESIGN The Art Factory GmbH

——————————————————————————————————–

MOSAIK

– a Gradient Diffusion Image made of 20,264 AI-generated images – or: twentythreebillionsixhundredandtwentyninemillionninehundredtwentyninethousandandsixhundred pixels (Backlit Print)

SPAN (Matias del Campo, Sandra Manninger) 2023

PRODUCTION ASSISTANCE:

Sang Wong Kang, Chandana Rao, Brendan Tsai, Devishi Suresh Kambiranda, Leon Mackow, Florian Zeif, Philipp Ma, Ranya Betts-Chen

SPONSORS:

Taubman College of Architecture & Urban Planning | University of Michigan, Center for Academic Innovation – XR, Media Design & Production | the University of Michigan, IDEE & DESIGN The Art Factory GmbH

REFERENCES

1. Exhibition website for /Imagine – A Journey into The New Virtual, Museum of Applied Arts (MAK), Vienna, Austria, https://www.mak.at/en/thenewvirtual (retrieved March 25th 2023)

2. Deltorn, Jean-Marc and Macrez, Franck, Authorship in the Age of Machine learning and Artificial Intelligence (August 1, 2018). In: Sean M. O’Connor (ed.), The Oxford Handbook of Music Law and Policy, Oxford University Press, 2019 (Forthcoming), Centre for International Intellectual Property Studies (CEIPI) Research Paper No. 2018-10, Available at SSRN: https://ssrn.com/abstract=3261329 or http://dx.doi.org/10.2139/ssrn.3261329

3. Blaseetta Paul, Artificial Intelligence and Copyright: An Analysis of Authorship and Works Created by A.I., 4 (5) IJLMH Page 2345 – 2361 (2021), DOI: https://doij.org/10.10000/IJLMH.112180

4. Flanagin A, Bibbins-Domingo K, Berkwits M, Christiansen SL. Nonhuman “Authors” and Implications for the Integrity of Scientific Publication and Medical Knowledge. JAMA. 2023;329(8):637–639. doi:10.1001/jama.2023.1344

5. Hendrix, J. S. (2011). Leon Battista Alberti and the Concept of Lineament. Retrieved from https://docs.rwu.edu/saahp_fp/30

6. Alberti, Leon Battista, 1404-1472. Leon Battista Alberti: On Painting: a New Translation and Critical Edition. New York: Cambridge University Press, 2011.

7. Fujita, Masahiro. (2001). AIBO: Toward the era of digital creatures. I. J. Robotic Res. 20. 781-794. 10.1177/02783640122068092

8. Reiser, Jesse and Nanako Umemoto. “Atlas of novel tectonics.” (2006).

9. Karras, Tero, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen and Timo Aila. “Analyzing and Improving the Image Quality of StyleGAN.” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2019): 8107-8116.

10. Ramesh, Aditya, Prafulla Dhariwal, Alex Nichol, Casey Chu and Mark Chen. “Hierarchical Text-Conditional Image Generation with CLIP Latents.” ArXiv abs/2204.06125 (2022): n. pag.

11. Rombach, Robin, A. Blattmann, Dominik Lorenz, Patrick Esser and Björn Ommer. “High-Resolution Image Synthesis with Latent Diffusion Models.” 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021): 10674-10685.

12. Barthes, Roland, Annette Lavers and Colin Smith. 1968. Elements of Semiology. 1st American ed. New York: Hill and Wang.